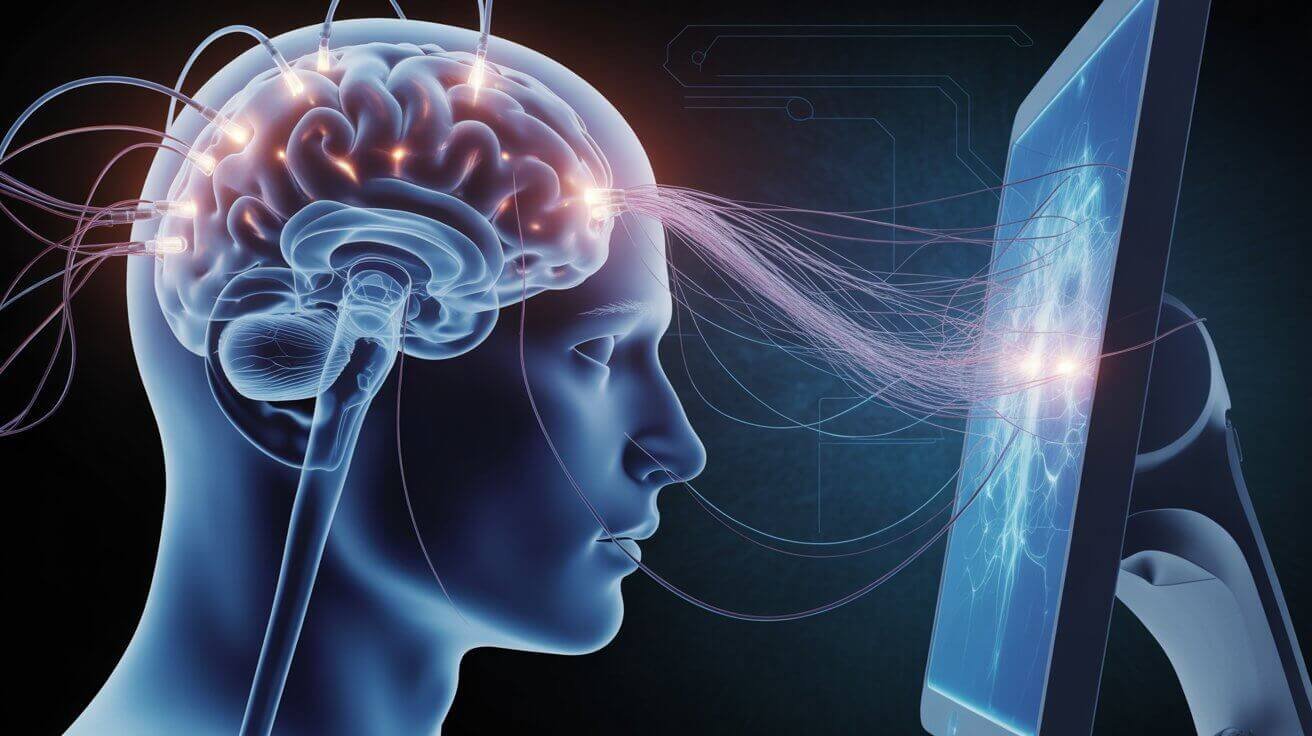

Brain-computer interfaces are no longer science fiction—they’re sitting in people’s heads right now. But as we connect our brains to machines, we’re entering uncharted territory where neural privacy and human autonomy hang in the balance. The question isn’t whether this technology will reshape humanity, but whether we’re prepared for the profound ethical implications that come with reading and manipulating our most intimate thoughts.

Neural privacy is one of the most pressing concerns of our connected age. When devices can decode brain signals and potentially influence neural activity, mental freedom itself is at stake. Brain-computer interfaces promise remarkable benefits for paralyzed individuals while raising hard questions about cognitive enhancement, data security, and the nature of human consciousness.

The Reality of Brain-Computer Interface Technology

Understanding brain-computer interfaces requires looking beyond the hype to what is actually happening in laboratories and operating rooms today. The technology works through electrode arrays that record neural signals, which software then translates into digital commands.

Neuralink has implanted its device in its first three human patients, with initial results showing promising neuron spike detection. The first patient, paralyzed by a spinal cord injury, can now control a computer cursor and play video games using only his thoughts. The second recipient has been using computer-aided design software to create 3D objects, while the third continues through early trials.
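
To make "spike detection" concrete, here is a minimal sketch of a common textbook approach: flag samples whose amplitude exceeds a multiple of a robust noise estimate. It is an illustration only, not Neuralink's method. The function name `detect_spikes`, the multiplier `k`, and the `refractory` window are assumptions chosen for the example.

```python
from statistics import median


def detect_spikes(samples, k=4.0, refractory=3):
    """Return sample indices where the signal crosses a noise-scaled threshold.

    Hypothetical helper for illustration; k and refractory are assumed values.
    """
    # Robust noise estimate: median absolute amplitude, scaled so that it
    # approximates the standard deviation of Gaussian background noise.
    sigma = median(abs(s) for s in samples) / 0.6745
    threshold = k * sigma

    spikes = []
    last = -refractory - 1  # allows a spike at index 0
    for i, s in enumerate(samples):
        # Skip crossings that fall inside the refractory window of the
        # previous spike so one event is not counted twice.
        if abs(s) > threshold and i - last > refractory:
            spikes.append(i)
            last = i
    return spikes


# Toy recording: low-amplitude noise with two large deflections.
recording = [0.1, -0.2, 0.15, -0.1, 5.0, 4.0, 0.1, -0.15, 0.2, -0.1, -6.0, 0.1]
print(detect_spikes(recording))
```

Real systems add filtering, spike sorting, and a decoder that maps firing patterns to intended movements. The point here is only that the raw input is a continuous voltage trace, and everything downstream is inference from it.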

How Neural Privacy Gets Compromised

Brain-computer interfaces expose neural privacy through several pathways. Read-out BCIs can acquire not only the user’s brain data but also other physiological and behavioral information, and then draw specific inferences about the user’s brain activity or thoughts. As a result, these systems could reveal mental processes the user never explicitly consented to share.

The technology doesn’t just read intended commands; it also captures the background noise of consciousness. Neural signals contain far more information than users intend to share, potentially exposing emotions, memories, and subconscious thoughts. Even more concerning, researchers have demonstrated that attackers can design “brain spyware” to locate and extract users’ private data, including financial and facial information.

The Enhancement vs. Medical Treatment Divide

Society faces a critical decision about where to draw ethical lines between therapeutic applications and human enhancement. Medical uses of brain-computer interfaces clearly serve compelling interests—helping paralyzed individuals regain mobility and communication represents humanitarian progress that few would question.

However, the debate becomes murky when healthy individuals seek cognitive improvements. BCIs may hold great promise for nonmedical purposes by unlocking human neurocognitive potential, with invasive enhancement BCIs drawing particular attention. Yet these applications raise fundamental questions about fairness, identity, and what it means to be authentically human.

The Slippery Slope of Cognitive Enhancement

Enhancement brain-computer interfaces could create unprecedented social stratification. Wealthy individuals might access cognitive upgrades unavailable to others, effectively creating a two-tiered humanity in which neural privacy becomes a luxury good. Workplace pressures could also make cognitive enhancement feel mandatory rather than optional, undermining genuine consent.

The technology also challenges personal identity in ways we’re only beginning to understand. When external computers become integrated with brain processes, it becomes philosophically difficult to say where the person ends and the machine begins. Our sense of agency and free will is similarly disrupted when artificial systems influence neural activity.

Current Ethical Frameworks Are Insufficient

Traditional bioethics principles (autonomy, beneficence, non-maleficence, and justice) provide a starting point but do not fully address the unique challenges of neural privacy. The direct connection that BCIs create between human brains and computer hardware raises ethical, social, and legal challenges that merit further examination.

Informed consent becomes particularly problematic when users cannot fully understand the long-term implications of brain modification. The technology is complex enough that even researchers struggle to predict all potential outcomes, which makes truly informed consent very difficult to obtain.

Privacy Rights in the Neural Age

Neural privacy demands new legal protections beyond existing data privacy laws. Neural data are uniquely sensitive because of their intimate nature, and they pose distinct risks and ethical concerns around privacy and safe control of the data. Current regulations such as GDPR weren’t designed with brain data in mind, leaving dangerous legal gaps.

Brain information differs fundamentally from other personal data because it reveals the content of consciousness itself. Therefore, neural privacy violations could expose not just what we’ve done, but what we’ve thought, felt, and intended. This level of intrusion exceeds any previous technological capability.

Real-World Applications and Their Dilemmas

Examining specific use cases reveals how ethical challenges manifest in practice. Gaming applications might seem harmless, but they normalize brain monitoring and create databases of neural patterns. Similarly, workplace brain-computer interfaces could improve safety while enabling unprecedented employee surveillance.

Educational applications present particularly complex dilemmas. BCIs can track student attention, identify students’ unique needs, and alert teachers and parents to students’ learning progress. While potentially beneficial for personalized learning, these systems could also pressure students into constant neural monitoring, undermining mental privacy during crucial developmental years.

The Military and National Security Dimension

China reportedly holds an advantage in military BCIs, and U.S. military superiority appears to be threatened by the speed and manner in which other countries conduct related research. This international competition adds urgency to ethical considerations, as nations might prioritize technological advancement over neural privacy protections.

Military applications could fundamentally alter warfare and intelligence gathering, creating pressures for rapid deployment despite unresolved ethical concerns. Consequently, soldiers might face coercion to accept brain modifications that compromise their neural privacy for national security purposes.

Practical Steps for Protecting Neural Privacy

Creating meaningful protections for neural privacy requires immediate action across multiple fronts. Regulatory frameworks must evolve quickly to match technological capabilities while ensuring innovation continues within ethical boundaries.

First, we need explicit neural rights legislation that establishes brain data as a special category requiring heightened protection. Second, technical standards should mandate user control over neural data collection, storage, and sharing. Third, oversight bodies must include neuroscientists, ethicists, and affected communities—not just technologists and corporations.
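
As a rough illustration of the second point, user control over collection, storage, and sharing can be modeled as a deny-by-default consent record that every data operation must check. This is a minimal sketch, not an existing standard or API: `NeuralConsent`, `share_neural_data`, and the purpose and action labels are all hypothetical names invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class NeuralConsent:
    """Hypothetical per-user consent record. Nothing is allowed by default."""

    # Each entry is a (purpose, action) pair the user has opted in to,
    # for example ("cursor_control", "collect").
    allowed: set = field(default_factory=set)

    def grant(self, purpose, action):
        self.allowed.add((purpose, action))

    def revoke(self, purpose, action):
        # discard() is a no-op if the pair was never granted.
        self.allowed.discard((purpose, action))

    def permits(self, purpose, action):
        return (purpose, action) in self.allowed


def share_neural_data(consent, purpose, payload):
    """Release payload only if the user opted in to sharing for this purpose."""
    if not consent.permits(purpose, "share"):
        raise PermissionError(f"no consent to share data for {purpose!r}")
    return payload


consent = NeuralConsent()
consent.grant("cursor_control", "collect")

# Collection was granted, but sharing was not, so this request is refused.
try:
    share_neural_data(consent, "cursor_control", b"decoded-command")
except PermissionError as err:
    print(err)
```

The design choice worth noting is that consent is scoped per purpose and per action and can be revoked at any time, so agreeing to collection for one use does not silently authorize storage or sharing for another.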

Individual Strategies for Neural Privacy

While systemic solutions develop, individuals can take steps to protect their neural privacy. Research any brain-computer interface thoroughly before use, understanding both its stated capabilities and its potential for misuse. Demand transparency from companies about their data collection, storage, and sharing practices.

Support organizations advocating for neural rights and ethical neurotechnology development. Furthermore, engage in public discussions about acceptable uses of brain-computer interfaces, as these conversations will shape future regulations and social norms.

Future Implications: Where Neural Privacy Leads Us

The trajectory of brain-computer interface development suggests we’re approaching a pivotal moment for human consciousness and privacy. As BCIs are used to treat more types of injuries and diseases, the privacy challenges will grow, because more machines will be able to tap into our private brain processes.

Within the next decade, brain-computer interfaces will likely become more sophisticated and widespread. Consequently, neural privacy protections established now will determine whether this technology empowers human flourishing or enables unprecedented mental surveillance and control.

The stakes extend beyond individual privacy to encompass human agency itself. If we fail to establish robust neural privacy protections, we risk creating a future where thoughts become commodities and mental freedom becomes a historical curiosity.

Conclusion: Balancing Innovation with Neural Privacy

Brain-computer interfaces represent one of humanity’s greatest technological opportunities and one of its most profound ethical challenges. These devices offer genuine hope for treating paralysis, depression, and neurological disorders while simultaneously threatening the sanctuary of human consciousness.

The path forward requires acknowledging that neural privacy isn’t just another privacy concern—it’s fundamental to human dignity and autonomy. We must develop brain-computer interfaces thoughtfully, with robust protections built into the technology from the beginning rather than added as afterthoughts.

Success means creating a future where brain-computer interfaces enhance human capabilities while preserving the neural privacy that makes us uniquely human. Failure could mean losing something irreplaceable: the privacy of our own minds. The choice is ours, but the window for making it wisely is rapidly closing.